Making the DumTeeDum map part 2.

Sketching out ideas.

Our process starts by defining the outcome and working out the best/most efficient way to achieve it.

We then build and test in tight iterations, changing tack as new ideas come in and limits are reached. We try to grow our products organically based on user feedback and demand instead of putting too much up-front work into something nobody wants. (We learned this the hard way.)

The original outcome was defined:

"Help people find other Archers fans nearby so they can meet up."

Great! We're helping connect people! I can get on board with that.

The most obvious idea is a map. Maps have built-in discoverability and are a great way to convey information visually. Everyone can read a map, right?

Hatching the eggs.

For v0.1 we decided to start by integrating with Twitter: tweet your location and appear on the map.

This is where it started to get complicated. Twitter provides geolocation details, but only when the user's privacy settings allow it. This was unreliable, so we decided to get the user to tweet their location as text.

We wrote a Twitter bot in Python 3 which followed a hashtag and geocoded the locations that were tweeted.

Initial tests worked OK as long as you typed in exactly what the bot expected. In the end, most of the bot code was sanitising, spell-checking and tokenising the input.
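To give a flavour of that clean-up work, here is a minimal sketch of the sanitising and tokenising step. It is not the bot's actual code: the function name `extract_location` and the example tweet are made up for illustration, and spell-checking is left out entirely.

```python
import re


def extract_location(tweet_text: str) -> str:
    """Reduce a raw tweet to a lower-case location string (illustrative only)."""
    text = re.sub(r"https?://\S+", " ", tweet_text)  # drop links
    text = re.sub(r"[@#]\w+", " ", text)             # drop mentions and hashtags
    text = re.sub(r"[^\w\s,'-]", " ", text)          # drop emoji and stray punctuation
    tokens = text.split()                            # tokenise on whitespace
    return " ".join(tokens).strip(" ,").lower()


# Hypothetical tweet, not taken from real data.
print(extract_location("@dumteedum  Salisbury, UK!! #archers https://t.co/abc"))
```

Even a small function like this shows why the bot grew: every new way of writing a place name needs another rule.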

There's two Bostons?

Yes, we slammed straight into the old chestnut that many town names exist in more than one country.

Solved, by conversational UI! Maybe.

This was genius! We'd just program the Tweet bot to ask the original tweeter for clarification of what country they are in, with a numbered list like:

1. United Kingdom

2. United States

3. France

4. Germany

(It's surprising how many countries share the same town names.)

This also worked well, but the bot was starting to get complicated. It now had to backtrack up the thread to work out what the responses related to. This would prove to be the Achilles heel, but initial tests proved it would actually work.
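The clarification step can be sketched roughly like this. It is an illustration under assumptions rather than the real bot: `GAZETTEER` is a toy lookup table, and `clarification_prompt` and `resolve_reply` are hypothetical names. The thread backtracking is not shown; the sketch assumes the bot already knows which town a reply refers to.

```python
import re
from typing import Optional

# Toy lookup table for illustration; a real gazetteer would be far larger.
GAZETTEER = {
    "boston": ["United Kingdom", "United States"],
}


def clarification_prompt(town: str) -> Optional[str]:
    """Return a numbered question if the town is ambiguous, else None."""
    countries = GAZETTEER.get(town.strip().lower(), [])
    if len(countries) < 2:
        return None  # unknown or unambiguous: nothing to ask
    lines = [f"Which {town.strip().title()} do you mean? Reply with a number:"]
    lines += [f"{i}. {country}" for i, country in enumerate(countries, start=1)]
    return "\n".join(lines)


def resolve_reply(town: str, reply: str) -> Optional[str]:
    """Map a reply such as '2 please' back to the chosen country."""
    countries = GAZETTEER.get(town.strip().lower(), [])
    match = re.search(r"\d+", reply)
    if match is None:
        return None
    index = int(match.group()) - 1
    return countries[index] if 0 <= index < len(countries) else None


print(clarification_prompt("Boston"))
print(resolve_reply("Boston", "2 please"))
```

The hard part in practice is everything around this: matching a reply to the right question when several conversations are in flight.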

Happy we could get the data out of social media, we just needed to get it into the map. For this we'd use a standard called GeoJSON which is widely used and well documented.
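For anyone who hasn't met GeoJSON, here is a small sketch of what a feed of pinned fans might look like when built in Python. The `name` property and the helper names are assumptions for illustration, not our actual schema. The one thing to remember is that GeoJSON coordinates are written longitude first, then latitude.

```python
import json


def to_feature(name: str, lat: float, lon: float) -> dict:
    """Build one GeoJSON Point feature (coordinates are [lon, lat])."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": {"name": name},  # hypothetical property
    }


def to_feature_collection(fans: list) -> dict:
    """Wrap (name, lat, lon) tuples in a GeoJSON FeatureCollection."""
    return {
        "type": "FeatureCollection",
        "features": [to_feature(name, lat, lon) for name, lat, lon in fans],
    }


# Made-up example entry near Salisbury, UK.
feed = to_feature_collection([("Example fan", 51.07, -1.79)])
print(json.dumps(feed, indent=2))
```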

We didn't want the overhead of database lookups but were concerned with how big the GeoJSON feed might get if a lot of people joined the map.

Geohashes to the rescue!

Geohashing basically draws a grid around the world and gives each square a short text code. Any points in the same square have the same geohash. For example, Salisbury, UK converts to a three-character geohash of gcn. All points in the same box around Salisbury will get the same geohash/short code.

Check out this link for a great demonstration of geohashing.
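If you'd rather see it in code, here is a minimal sketch of the standard geohash encoding. It is written for illustration and is not lifted from our codebase; the coordinates used for Salisbury and London are approximate.

```python
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # geohash alphabet (no a, i, l, o)


def geohash_encode(lat: float, lon: float, precision: int = 3) -> str:
    """Encode a latitude/longitude pair as a geohash of the given length."""
    lat_range = [-90.0, 90.0]
    lon_range = [-180.0, 180.0]
    chars = []
    value = 0        # bits collected for the current character
    bit_count = 0
    use_lon = True   # bits alternate, starting with longitude
    while len(chars) < precision:
        rng, coord = (lon_range, lon) if use_lon else (lat_range, lat)
        mid = (rng[0] + rng[1]) / 2
        if coord >= mid:
            value = (value << 1) | 1
            rng[0] = mid   # keep the upper half
        else:
            value <<= 1
            rng[1] = mid   # keep the lower half
        use_lon = not use_lon
        bit_count += 1
        if bit_count == 5:  # every 5 bits make one base-32 character
            chars.append(BASE32[value])
            value = 0
            bit_count = 0
    return "".join(chars)


print(geohash_encode(51.07, -1.79))   # Salisbury, UK -> gcn
print(geohash_encode(51.51, -0.13))   # London falls in the next square along
```

Each extra character splits the square into 32 smaller ones, so the length of the code controls how coarse the grid is.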

The plan was to write each person into a file on the web server named after their geohash. The browser client would sweep the viewable area, work out which geohashes it covers and pull down only the data that the user can see. That way we reduce the overall page weight and don't waste megabytes.
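The sweep itself can be sketched as below. The real client runs in the browser; this is a Python illustration of the idea only, and the `.geojson` file naming is an assumption. The sketch ignores views that cross the antimeridian.

```python
import math

BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"


def geohash_encode(lat: float, lon: float, precision: int) -> str:
    """Standard geohash encoding (bits alternate lon, lat; 5 bits per char)."""
    ranges = {"lat": [-90.0, 90.0], "lon": [-180.0, 180.0]}
    coords = {"lat": lat, "lon": lon}
    out, value = [], 0
    for bit in range(5 * precision):
        axis = "lon" if bit % 2 == 0 else "lat"
        low, high = ranges[axis]
        mid = (low + high) / 2
        if coords[axis] >= mid:
            value = (value << 1) | 1
            ranges[axis][0] = mid
        else:
            value <<= 1
            ranges[axis][1] = mid
        if bit % 5 == 4:
            out.append(BASE32[value])
            value = 0
    return "".join(out)


def geohashes_in_view(south, west, north, east, precision=3):
    """List the geohash squares that overlap a bounding box."""
    bits = 5 * precision
    lon_bits, lat_bits = (bits + 1) // 2, bits // 2
    height = 180.0 / 2 ** lat_bits   # square height in degrees
    width = 360.0 / 2 ** lon_bits    # square width in degrees
    # Work out which grid rows and columns the view touches, clamped to the grid.
    row0 = max(0, math.floor((south + 90.0) / height))
    row1 = min(2 ** lat_bits - 1, math.floor((north + 90.0) / height))
    col0 = max(0, math.floor((west + 180.0) / width))
    col1 = min(2 ** lon_bits - 1, math.floor((east + 180.0) / width))
    hashes = []
    for row in range(row0, row1 + 1):
        for col in range(col0, col1 + 1):
            # Encode the centre of each square to get its geohash.
            lat = -90.0 + (row + 0.5) * height
            lon = -180.0 + (col + 0.5) * width
            hashes.append(geohash_encode(lat, lon, precision))
    return hashes


# A view stretching from Salisbury to London touches two squares.
for code in geohashes_in_view(51.0, -1.9, 51.6, -0.1):
    print(f"{code}.geojson")  # hypothetical file name on the web server
```

Because each file is static, the web server can hand them out without touching a database, which was the whole point.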

Diving into the unknown.

With the pieces in place, we fired off a quick email to Roifield asking a couple of questions. Possibly we forgot to say "Don't tell anyone about this, yet" because next thing we know he's tweeted his followers to get on board!

What happened next was a textbook example of what I will call "Initial user testing." People were tweeting postcodes, slang and colloquial replies, and the Tweet bot just couldn't geocode them all. In the end, we hit the Twitter API's limits on replies and searches and the bot stopped working.

Fixing the UI.

With the Tweet bot in smouldering ruins, we built the UI we should have done originally and allowed people to pin themselves to the map by clicking where they are. All the geocoding and conversational UI problems magically go away and everyone is happy.

We pushed the new release and manually contacted anyone who was in the middle of a Tweetbot transaction when it crashed to encourage them to come over to the web experience.

In total, it probably took longer to communicate to the users than to build the next iteration, but in general everyone was happy!

a11y oops!

Despite the fact that we build WCAG 2.0 compliant websites - have done for decades - in our haste we forgot to check how accessible a JavaScript map is. Here's the short answer: it's not, in any way. We were alerted to this fact by some very nice people on Twitter who naturally felt excluded.

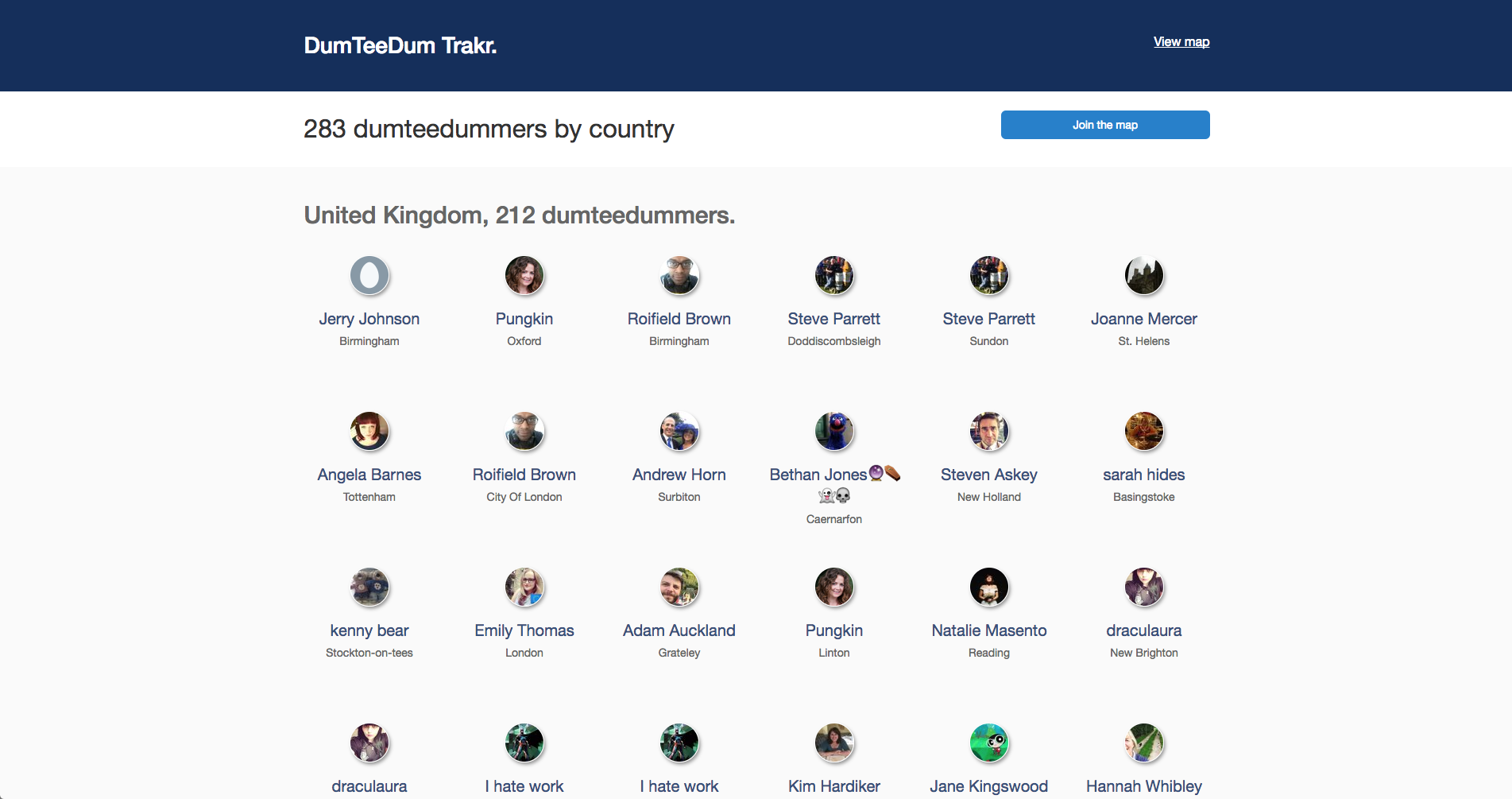

Screen reading the map was out of the question, so the next logical step was to create a list-based view and link to it from the map.

After this, we added a simple list-based workflow that lets the user pick a country and town (re-using the old geocode functionality from the Tweet bot), and everyone's happy again.

From tiny acorns...

This project was bootstrapped and grown organically. It's still growing and there are hundreds of features we think would be great. The important thing is to build what people want, not what we want.

I'll go into more depth with the technical details next time, explaining how we use event sourcing, a message queue, a NoSQL database and all sorts of other things to power the underlying architecture which supports a simple map with some faces on it.

Take care,

Adam.